With the loss of many well-used OSINT tools recently, it's a good time to remember what really makes an OSINT investigation effective: tools come and go, but the principles of good investigation don't change. Good OSINT investigations start with a decision about method and parameters (what do I need to know?) and only then ask how do I find out? Starting with your favourite tools and asking what they can tell you will always lead to difficulties and missed lines of enquiry. If you only have a big hammer, every problem looks like a nail. If you only have Stalkscan, every OSINT question becomes "find where x was tagged with y". Tools do matter, of course, and the loss of Facebook's Graph Search has undoubtedly made some kinds of enquiry more difficult. But learning good method matters more for long-term success than relying on tools that often have a very short shelf-life.

Example: Bellingcat’s Method

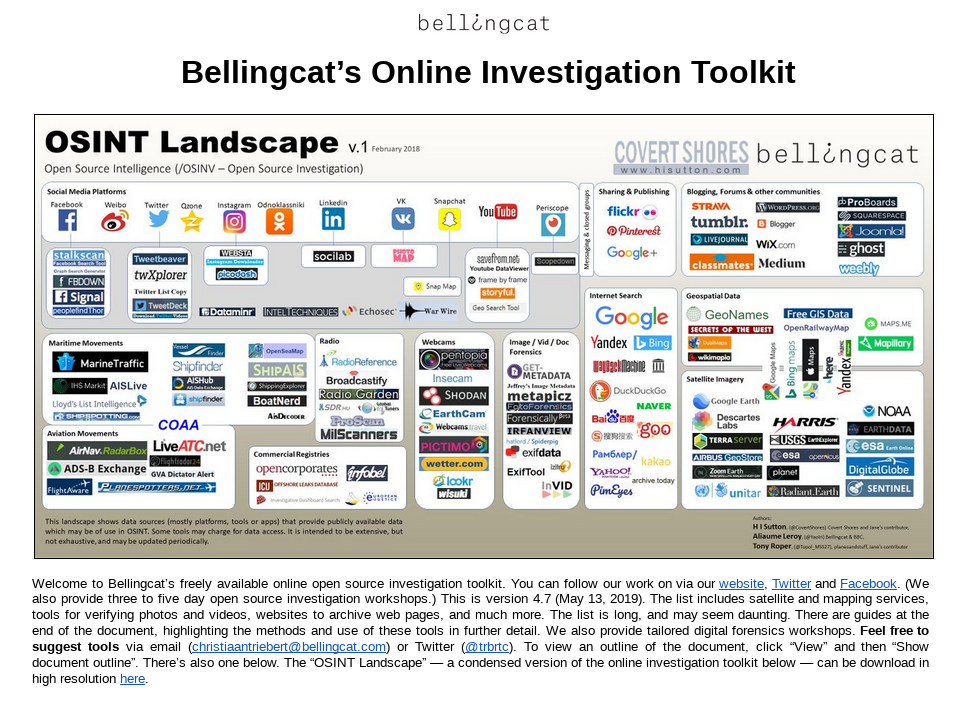

To illustrate this point I want to write a little about Eliot Higgins' article in the New York Times that asked "Was Iran Behind The Iran Tanker Attacks?" Eliot is the founder of Bellingcat, and he and his team are successful digital investigators. So of course everyone wants to know which tools and resources Bellingcat use. Bellingcat are open about this, and their list of commonly-used tools is available for all the world to see. But anyone can access the same tools and datasets, so what makes Bellingcat and other investigators succeed where others do not? Experience counts for a lot, of course, but part of the reason for Bellingcat's success is that they take a disciplined and methodical approach to their investigations in order to find the correct answers. The toolset is only a means to that end. The Iran tanker article is a short and simple example of how a logical investigative method asks the right questions of the data and draws the correct conclusions, even when those conclusions are less sensational than the claims made by others.

True, False, Or Cannot Say

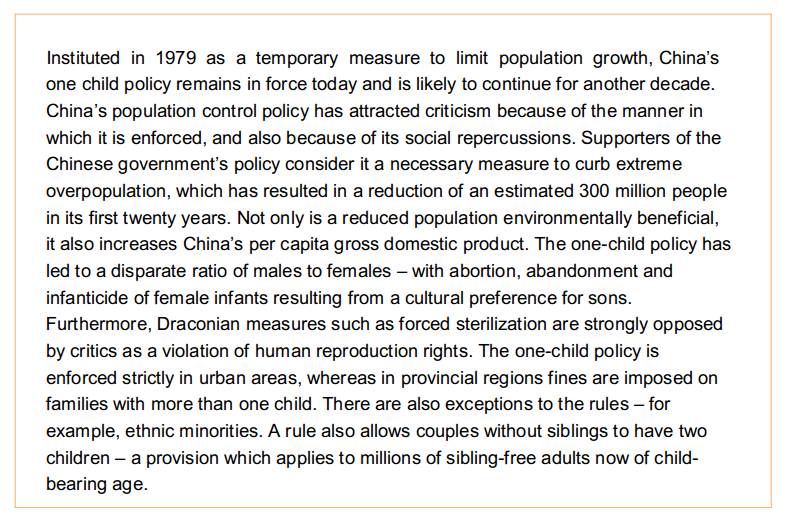

Before looking at Eliot’s article, here is a simple quiz (source) that explains my point a little more clearly. Here’s some information about China’s one-child policy:

Now comes a question with three possible answers:

China’s one-child policy increases the country’s wealth.

a) True b) False c) Cannot Say

Reading through the information we are given, it says that a reduced population increases per capita GDP, so the answer must be true, right? Read the information again: we are only told that a reduced population increases per capita GDP. Per capita GDP measures output per person; a higher figure is not the same thing as an increase in the country's overall wealth. It goes beyond the limits of the information provided to say with certainty that China's one-child policy increases or decreases the country's wealth. So the only correct and logical answer to the question is "cannot say".

Mind The Gaps

For an OSINT investigation to be accurate and truthful, we need to apply the same strict logic to the sources of information at our disposal and to draw conclusions only when they are supported by the facts we uncover. There will always be gaps where we have to say "don't know", so we must be disciplined before drawing any conclusion from the data we have gathered. Does this data truly support the conclusion that I am arguing for? Yes or no? Or is the real answer "cannot say", in which case we apply gap analysis to decide what we need to find out next? This logical approach is what turns an OSINT gatherer into an OSINT investigator.

This is easy in theory, but in practice our biases and unconscious assumptions sometimes lead us to draw conclusions that are not really supported by the evidence. The human brain does not like gaps, so we sometimes stretch the evidence to fill them even when it is not justified. The hacker we are investigating has a distinctive username, and there's a guy on a carding forum with the same username, so it's definitely the same guy, right? Perhaps, but the truthful answer is "cannot say": further enquiries would be needed to turn that hypothesis into an established fact.

Eliot's article is a good practical example of how to ask these disciplined questions of the data we have. There is a lot of information about the Gulf tanker attacks: photos of burning ships, reports of torpedoes and flying objects, possible limpet mines on the side of a ship, and some grainy footage of an Iranian boat removing something from the side of a tanker under cover of darkness. This data comes from a variety of sources, but what can we safely conclude from it, if anything?

In the article, the available sources of material are listed without conclusions being drawn. Eliot then asks questions of the information:

Given the political import and the source of the statement, it is necessary to check the claims made. What can we actually see in the American evidence from the Gulf of Oman?

This is another way of framing the "true, false, or cannot say" question from the quiz above. What do we actually know about the data presented to us? Does the video of the Iranian boat crew at the side of the tanker show with certainty that Iran was responsible for the attacks? It is possible, but without further information the only logically correct answer is "cannot say". Eliot phrases it this way:

Nothing presented as evidence proves that the object was placed there by the Iranians. The video shows only that the Iranians chose to remove it for an as yet unknown reason…we can say for certain this is not the slam-dunk evidence that some would like to claim it is.

Sometimes "cannot say: further information required" is the most boring answer, but it is often the most truthful.